AI Lightweighting Competition: Enerzai's Breakthrough on the Global Stage with 1.58-Bit 'Extreme Quantization'

Posted February 10, 2026 09:57

Updated February 10, 2026 09:59

- The company has demonstrated its capabilities on global stages such as CES 2026 and Synaptics Tech Day, showing that high-performance voice and language models can run autonomously on low-spec edge devices without a server connection.

- Following the successful deployment of its models on 2 million LG Uplus set-top boxes, Enerzai is expanding into the autonomous driving and industrial sectors while strengthening its global footprint through partnerships with Arm, Advantech, and Synaptics.

In deep learning and AI, ‘quantization’ refers to the process of converting a model's weights and activations from high precision formats like 32-bit floating-point (FP32) to lower precision formats like 8-bit integers (INT8).

For example, if an FP32-based model is like a detailed map showing every road and building address, INT8 is like a map showing only major buildings and large roads. The INT8 map is lightweight and compact, making it easy to carry and much faster for navigation. While it naturally struggles with finding detailed information, it poses no major problem for reaching the destination. AI model quantization works on a similar principle.
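The principle can be illustrated with a minimal sketch. It assumes symmetric per-tensor quantization, which is one common scheme among several; the function names and sample weights are illustrative and do not come from Enerzai or any specific library.

```python
def quantize_int8(values):
    """Map floats to signed 8-bit codes using one shared scale (symmetric scheme)."""
    max_abs = max(abs(v) for v in values)
    # One code step represents `scale` in the original float range.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]


# Hypothetical FP32 weights, chosen only for illustration.
weights = [0.82, -1.27, 0.03, 0.5, -0.31]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# The rounding error per weight is bounded by half a code step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_error)
```

Each weight now occupies one byte instead of four, and the price is a rounding error of at most half a step per weight. This is the "coarser map" of the analogy above.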

Quantizing an AI model reduces the computational load required for operation and speeds up processing. It also decreases the model's storage space and memory usage, improving energy efficiency. Accuracy inevitably decreases, and minimizing this loss is the competitive edge for AI quantization technology companies. That competition is intensifying. The field started with 16-bit and 8-bit quantization, and extreme quantization below 2 bits has recently begun to emerge.

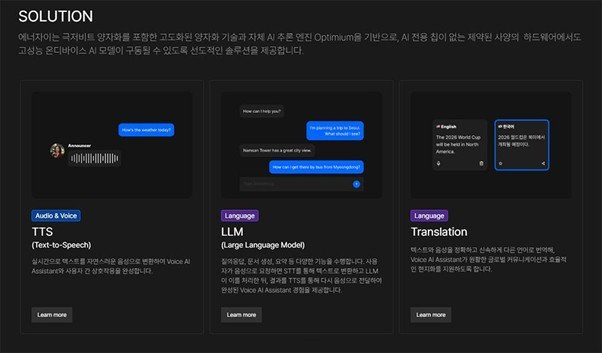

AI model quantization is a technology that reduces model size and memory usage while maintaining precision as much as possible / source=Enerzai

Microsoft unveiled its BitNet b1.58 2B4T model in April last year, featuring extreme 1.58-bit quantization. NVIDIA is supporting the market by acquiring AI quantization technology companies Deci and OmniML, while Amazon acquired Perceive and Red Hat acquired Neural Magic, each securing a quantization ecosystem. Semiconductor manufacturers for small devices, such as Arm, Qualcomm, and MediaTek, which are closely related to model quantization, are also investing significant effort into technical support and ecosystem expansion.
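The "1.58-bit" label comes from restricting each weight to three values, -1, 0, or +1, which carries log2(3) ≈ 1.58 bits of information. The sketch below assumes the absmean rounding scheme described for BitNet b1.58 (scale by the mean absolute weight, round, then clip). The article does not say whether Enerzai uses the same rule, and the function name and sample weights are illustrative.

```python
import math


def quantize_ternary(weights):
    """Round weights to {-1, 0, +1} after scaling by their mean absolute value."""
    scale = sum(abs(w) for w in weights) / len(weights)
    if scale == 0:
        return [0] * len(weights), 1.0
    codes = [max(-1, min(1, round(w / scale))) for w in weights]
    return codes, scale


# Information content of one three-valued weight.
bits_per_weight = math.log2(3)

# Hypothetical weights, chosen only for illustration.
weights = [0.9, -0.05, -1.4, 0.2, 0.6]
codes, scale = quantize_ternary(weights)
print(codes, scale, bits_per_weight)  # codes are [1, 0, -1, 0, 1]
```

Because every code is -1, 0, or +1, a matrix multiplication over such weights reduces to additions and subtractions, which is one reason the format suits low-power hardware.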

Interestingly, a handful of big tech companies are not monopolizing the ecosystem; instead, diverse tech startups are collectively building it. This is due to the sheer breadth of AI application areas and the differing application targets for each company. In Korea, startups like Enerzai, Squeezebits, Nota, and Clika are making significant strides in the AI model quantization field. Each company is achieving remarkable growth. We examine the trajectory of AI model quantization companies through the case of Enerzai.

Enerzai Targets Global Market with 1.58-Bit Quantization

Enerzai's quantization technology and AI inference engine Optimium enable ultra-low-bit quantization of diverse AI models / source=Enerzai

Enerzai is competing in the AI quantization market with two assets: technology that quantizes AI models to ultra-low bit widths while minimizing accuracy loss, and its self-developed AI inference optimization engine, 'Optimium'. Optimium can flexibly generate 1.58-bit kernels, so it supports even extreme 1.58-bit models. Applied to OpenAI's Whisper Small speech recognition model, the technology kept accuracy loss below 0.39% relative to the baseline while improving speed by 2.46x. It also reduced memory usage, a major bottleneck when running speech and language AI models in edge environments, by 77.3%.
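To see why ternary weights shrink memory so sharply, consider how they might be stored. Since 3^5 = 243 fits in one byte, five ternary weights can share a byte, or 1.6 bits per weight. The sketch below assumes this base-3 packing purely for illustration. Optimium's actual storage format is not described in the article, and the sketch does not attempt to reproduce the 77.3% figure, which reflects a whole model rather than weights alone.

```python
def pack_ternary(codes):
    """Pack ternary codes (-1, 0, +1) five per byte as base-3 digits."""
    packed = bytearray()
    for i in range(0, len(codes), 5):
        byte = 0
        # The first code in each group becomes the least significant digit.
        for c in reversed(codes[i:i + 5]):
            byte = byte * 3 + (c + 1)
        packed.append(byte)
    return bytes(packed)


def unpack_ternary(packed, count):
    """Inverse of pack_ternary; `count` trims padding in the last byte."""
    codes = []
    for byte in packed:
        for _ in range(5):
            codes.append(byte % 3 - 1)
            byte //= 3
    return codes[:count]


# Hypothetical ternary weights, chosen only for illustration.
codes = [1, 0, -1, 0, 1, -1, 1]
packed = pack_ternary(codes)
assert unpack_ternary(packed, len(codes)) == codes

# Weight storage for 1,000 weights: FP32 versus packed ternary.
fp32_bytes = 1000 * 4
ternary_bytes = len(pack_ternary([0] * 1000))
print(fp32_bytes, ternary_bytes)  # 4000 200
```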

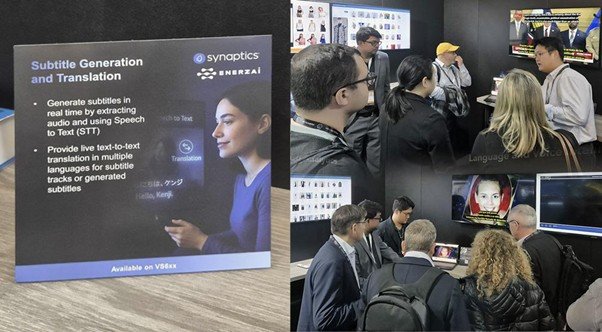

Enerzai participated in the Synaptics booth at the International Broadcasting Convention (IBC) in Amsterdam from September 12 to 15 last year, introducing its real-time speech-to-subtitle translation technology implemented via on-device AI / source=IT DongA

Enerzai participated in Synaptics Tech Day held in San Jose, California, USA, on October 15 last year, demonstrating a 1.58-bit AI model on the Synaptics SL1680 embedded IoT (Internet of Things) SoC (System on Chip) for Synaptics customers and partners. This device is primarily used in low-power applications such as consumer electronics, industrial control systems, digital signage, and home security gateways. The key point is that it runs high-performance voice and language AI models autonomously, without requiring a server connection, even on relatively low-spec devices.

CEO Han him Jang presenting alongside global semiconductor companies STMicroelectronics and Microchip at embedded world North America / source=IT DongA

Additionally, he participated as a panelist at an AI symposium hosted by Iterate.ai, a U.S. company operating an industrial agent AI platform. He also completed the AI Innovation Alliance (AIIA), a localized AI accelerator program organized by the Ministry of Science and ICT and jointly operated by the New York University Stern School of Business, the National IT Industry Promotion Agency (NIPA), and the Korea Software Industry Association (KOSA). The program served as a stepping stone for entering the U.S. East Coast market by sharpening local market strategies and facilitating investor networking. Enerzai also introduced its technological capabilities at the 'K-Innovator Pitch Night' and 'Korean Founders Meetup in Stanford' events hosted by the U.S. IT media outlet TechCrunch.

In November, the company secured a booth at embedded world North America in Anaheim, California, and presented on the topic "Extreme Quantization Techniques for Ultra-efficient Embedded AI Inference."

Beyond Korea, Enerzai is growing as a key player in the Asian market

Enerzai is expanding its reach in the Asian market, including Korea. Selected for the Arm AI Partner Program in 2022, Enerzai maintains a collaborative relationship with Arm. Last October, it participated in a panel talk titled 'Expanding On-Device AI Across the Diverse Arm Ecosystem' at Arm Unlocked Korea 2025, an event featuring domestic and international Arm partners.

In November, it visited Taiwan to conduct a workshop with Advantech at an event hosted by the Edge AI Foundation, an IoT association, on the topic "Optimizing Edge AI: Lightweight Models and Scalable Architecture."

Han him Jang, CEO of Enerzai, conducting a Q&A session during his presentation at the Edge AI Foundation event / source=Enerzai

In a panel discussion with Qualcomm, Renesas, and Advantech, Enerzai addressed the topic 'Applied Edge AI - Orchestrating Edge AI: From Silicon to Scalable Solutions'. Following the event, a Memorandum of Understanding (MOU) was signed regarding cooperation in the development and deployment of edge AI solutions, specifically related to Advantech's software development environment and operating system, WEDA.

Enerzai CEO Han him Jang (second from left) introduces Enerzai's quantization technology to visitors / source=Enerzai

Aligning with the expansion of AI users, Enerzai also participated in the Consumer Electronics Show (CES 2026) held in Las Vegas, Nevada, USA, from January 6 to 9 this year. At the event, it showcased applications of its 1.58-bit quantization technology: implementing real-time voice-based smart light bulb command control using STT (Speech-to-Text) and NLU (Natural Language Understanding) models on a Raspberry Pi based on an Arm Cortex-A76 CPU, and demonstrating real-time subtitle generation and translation capabilities on devices powered by an Arm Cortex-A73 CPU and Synaptics NPU.

Furthermore, on January 15th, the company officially joined the Korea Association of Autonomous Mobility Industry, establishing a foothold in South Korea's autonomous driving industry. On February 5th, it participated as a Silver Sponsor in the Korea Special Interest Group on Programming Languages (SIGPL) Winter School, showcasing its self-developed 'Nadya' programming language and building relationships with the programming language academic and industrial communities both domestically and internationally.

Accumulating adoption cases, the AI model quantization industry looks even more promising for 2026

Thanks to AI model quantization reducing model size, voice command AI can now be used even in set-top boxes / source=LG Uplus

Since last year, Enerzai has been steadily advancing commercialization while establishing cooperative frameworks and introducing its technology in Korea and overseas markets, with its sights set on 2026. Late last year, Enerzai's quantized voice and language AI models were commercially deployed on 2 million LG Uplus IPTV set-top boxes. As more companies adopt AI, demand for AI model quantization technology will naturally increase.

While this article examines only Enerzai's case, AI quantization companies in Korea and abroad are already receiving numerous requests for collaboration and technology partnerships in their respective fields. This is only natural: advances in extreme quantization offer a technical path to running more sophisticated AI on everyday devices and, beyond that, to using AI throughout daily life. This year appears poised to be the year South Korea is recognized as the leading nation in AI model quantization technology.

By Si-hyeon Nam (sh@itdonga.com)
