HyperAccel Gains Prominence with IEEE's Top Paper Award and AWS Instance Listing.

Posted September 23, 2025 09:50

Updated September 23, 2025 09:54

- HyperAccel, an AI semiconductor company founded in 2023 by Professor Joo-Young Kim of KAIST, has gained significant attention for its innovative AI accelerators.

- A paper on the company's LPU (Large Language Model Processing Unit) won the 2024 Best Paper Award from IEEE Micro, a journal of the IEEE Computer Society, and the LPU was recently listed on AWS Marketplace, making HyperAccel the first Korean AI accelerator company to achieve such a listing.

- HyperAccel's advances in AI semiconductor technology have positioned it as a key player in the global market, and it plans to introduce its next-generation ASIC, 'Bertha,' in the first half of next year.

According to the report '2025-2030 Artificial Intelligence (AI) Data Center Market Size, Share and Trends,' released by market research firm MarketsandMarkets in December last year, the AI data center market is expected to be worth approximately $236.44 billion (about 328.17 trillion won) in 2025 and to grow at a compound annual rate of 31.6% to reach $933.76 billion (about 1,296.05 trillion won) by 2030. Forecasts differ among market research firms, but they broadly agree that the market will grow to around $800 billion (about 1,110.4 trillion won).
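As a rough consistency check on these figures, assuming five annual compounding periods between 2025 and 2030, applying the stated 31.6% growth rate to the 2025 estimate lands on the 2030 figure to within rounding:

$$
V_{2030} \approx V_{2025}\,(1 + r)^{5} = 236.44 \times 1.316^{5} \approx 236.44 \times 3.947 \approx 933.3 \ \text{billion dollars}
$$

The small gap from the reported $933.76 billion is consistent with the growth rate having been rounded to 31.6%.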

The firm attributes this explosive growth mainly to rising demand for AI computing driven by industry-wide digitalization, expanding government AI investment worldwide, and the need for large-scale data analysis and the high-performance infrastructure that AI requires. The concern is that demand for AI data center infrastructure is concentrated on NVIDIA's graphics processing units (GPUs). This global imbalance in AI infrastructure shows up in price volatility for high-bandwidth memory (HBM), a core component, and in demand concentrated among Big Tech companies.

HyperAccel's AI accelerator is an LPU (LLM Processing Unit) specialized for LLM (large language model) processing / Source=Gemini Image Generation

In response, big tech companies are moving early to design their own semiconductors or to secure AI semiconductors dedicated to inference. Google has been developing Tensor Processing Units (TPUs) since 2017 and using them in its cloud, and AWS has several in-house chips, including the Graviton CPU and the Trainium and Inferentia AI accelerators. Recently OpenAI, which is not a cloud service provider, teamed up with Broadcom to develop its own AI chips, and the drive to secure AI semiconductors has spread to almost every AI company.

### Companies worldwide race to secure semiconductors; Korean AI chips show considerable potential

While AI companies around the world focus on securing their own semiconductors, Korean companies are targeting the global market with self-designed chips. Notable Korean AI semiconductors include FuriosaAI's second-generation neural processing unit (NPU), RNGD, and Rebellions' Rebel Quad, both of which have been highly praised. RNGD, unveiled at Hot Chips 2024, is regarded as well suited to high-efficiency inference. Rebellions unveiled its chiplet-based AI semiconductor, Rebel Quad, at Hot Chips 2025 in August this year. Chiplet technology packages multiple smaller dies, which can be manufactured on different processes, together so that they work as a single chip. Mobilint and DeepX are also targeting the market with semiconductors for servers and for edge AI devices.

Canadian company Tenstorrent's RISC-V-based AI accelerator / Source=Tenstorrent

As Korean AI semiconductor companies unveil major new products starting this year, competition for market attention is fierce. They must also compete with AI semiconductor companies from Canada and the United States, such as Canada's Tenstorrent and Silicon Valley's SambaNova, Cerebras, and Groq. These companies enjoy a home-field advantage, so Korean companies must redouble their efforts.

### HyperAccel's paper and products are the first to draw attention overseas

Even before these next-generation semiconductors arrive, however, one company is already capturing widespread attention.

That company is HyperAccel, an AI semiconductor company founded in 2023 by Professor Joo-Young Kim of KAIST's Department of Electrical Engineering. Shortly after its founding, HyperAccel drew industry attention by launching 'Orion,' an LPU (Large Language Model Processing Unit) built on FPGA (field-programmable gate array) technology in collaboration with AMD. The company is now developing 'Bertha,' an ASIC (application-specific integrated circuit) on a 4-nanometer process, with a launch targeted for the first half of next year.

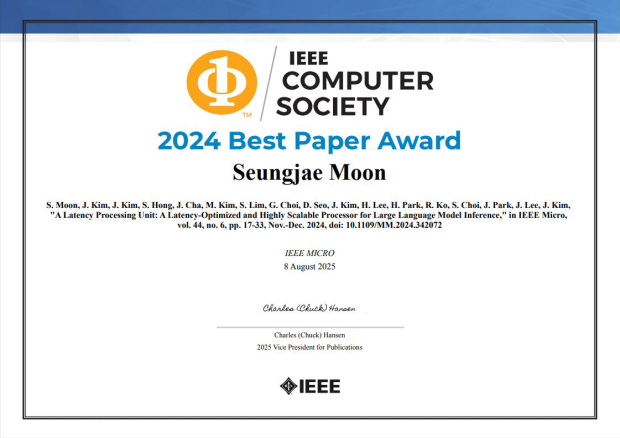

HyperAccel's paper 'LPU: A Latency-Optimized and Highly Scalable Processor for Large Language Model Inference,' published last year, won IEEE Micro's 2024 Best Paper Award this year / Source=IEEE Computer Society

The potential of HyperAccel's LPU was first recognized in academia. Last month, the paper 'LPU: A Latency-Optimized and Highly Scalable Processor for Large Language Model Inference,' which HyperAccel published last year, won the 2024 Best Paper Award from IEEE Micro, a journal of the IEEE Computer Society. It is the most prestigious paper award yet received by a Korean AI semiconductor company.

The IEEE Computer Society, which presented the award, is the largest of the IEEE's 39 technical societies, with 380,000 members in 150 countries, and together with the ACM (Association for Computing Machinery) it is considered one of the two main pillars of the global computing community. Its parent organization, the IEEE, is a professional association of electrical and electronics engineers established in the United States in 1963 and has about 500,000 engineering and technical professionals as members.

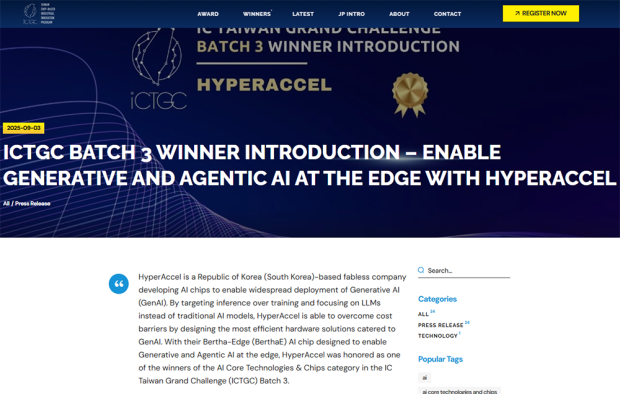

HyperAccel was also selected as one of eight winning companies in the IC Taiwan Grand Challenge, presented by Taiwan's National Science and Technology Council / Source=ICTGC

Adding to its recent accolades, HyperAccel won an award on September 1 in the 'AI Core Technology and Chip' category of the 'IC Taiwan Grand Challenge,' hosted by Taiwan's National Science and Technology Council (NSTC). The IC Taiwan Grand Challenge recognizes leading companies across sectors including AI core technology and chips, smart mobility, manufacturing, healthcare, and sustainability.

The AI Core Technology and Chip category drew particular attention as Taiwan rapidly solidifies its position as a global powerhouse for AI semiconductors. HyperAccel shared the honor in this category with DeepMentor and femtoAI. In its citation, the NSTC named HyperAccel's success in improving cost efficiency with a semiconductor designed specifically for LLM processing as a key reason for the selection.

The recognition from the Taiwanese government is particularly meaningful. Taiwan is already home to TSMC, the undisputed heavyweight of the global semiconductor industry. Furthermore, Nvidia, which commands over 90% of the current AI semiconductor market, is establishing a second headquarters on the island as a strategic forward base. HyperAccel's achievement is all the more notable because it comes at a time when AI semiconductor firms, including South Korea's own DeepX, which recently established a local branch, are flocking to Taiwan.

### LPU listed on AWS Cloud, accelerating entry into the US market

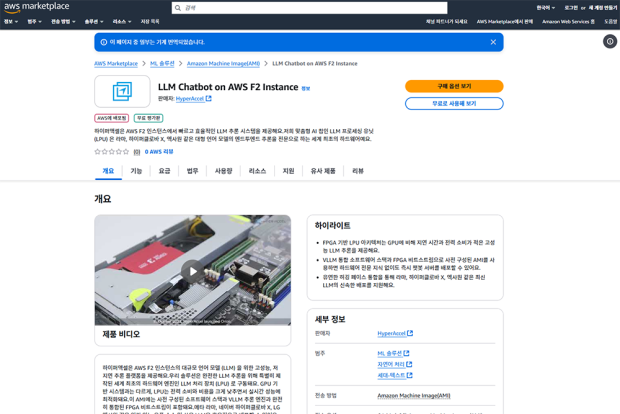

HyperAccel instances listed on AWS Marketplace / Source=AWS

HyperAccel has also moved a step closer to commercialization. It recently launched a commercial LPU service on Amazon Web Services (AWS) EC2 F2 instances, a first among Korean AI accelerator companies. Customers can rent an FPGA-based LPU through AWS Marketplace for machine learning, natural language processing, and text generation workloads.

Models supported in the initial version include ▲ Meta's Llama 3.1 8B Instruct and Llama 3.2 1B and 3B Instruct ▲ Naver's HyperCLOVA X SEED Text Instruct 0.5B and 1.5B ▲ LG AI Research's EXAONE 3.5 2.4B and 7.8B Instruct. With a Korean AI accelerator now available as a commercial service, companies in Korea and abroad can build LPU-based services on HyperAccel's FPGA platform.

HyperAccel will also attend Supercomputing 2025 (SC25), held in St. Louis, Missouri, USA, from November 16 to 21 this year, to introduce its LPU-based technology to the US market.

### In 2026, competition among global AI hardware companies will be in full swing

In 2023, the AI accelerator market centered on training, the work of building AI models. But because GPUs are expensive and in heavy demand, there is a growing trend toward using separate AI accelerators for inference. As a result, AI semiconductor companies in Korea and abroad are targeting inference markets suited to their respective applications rather than competing with Nvidia head-on, and HyperAccel is one of them.

On September 9, NVIDIA unveiled its next-generation AI accelerator, the Rubin CPX. Although it will not ship until late next year, it is already adding tension to the market / Source=Nvidia

Even so, focusing on a specific field does not mean facing few rivals. Competition is expected to intensify significantly in 2026 compared with this year. NVIDIA will ship its next-generation GPUs based on the Rubin architecture in the second half of next year. In the US, Cerebras will begin commercial operation of its Wafer-Scale Engine 3 (WSE-3), a supercomputer-grade chip. Tenstorrent, led by Jim Keller, is also likely to unveil new chips such as Quasar and Grendel next year. Adoption of FuriosaAI's RNGD is expected to rise significantly, and Rebellions' Rebel Quad should also see an increase in firm contracts.

HyperAccel's Bertha will likewise enter a market where survival demands competitiveness that lives up to high expectations. Encouragingly, unlike many other products, Bertha focuses on LLM inference and uses LPDDR memory instead of HBM, allowing it to operate in relatively low-power environments. HyperAccel aims to differentiate itself clearly and target specialized markets that existing high-performance semiconductors cannot reach. This year, HyperAccel firmly established its presence in both academia and the AI semiconductor industry. In 2026, its story will truly begin.

By Si-hyeon Nam (sh@itdonga.com)