Google DeepMind’s new AI can predict 3D images from 2D ones

Posted June 15, 2018 09:26

Updated June 15, 2018 09:26

Humans view objects from various angles and combine those views into a perception of a three-dimensional shape. With enough experience, they can predict the three-dimensional shape of an object without having to turn it around. The same applies when humans recognize the structure of a space or the location of an object.

Google's DeepMind, which caused a stir with AlphaGo, a computer program that plays the board game Go, has made headlines again with a new artificial intelligence (AI) system that the company claims has observational abilities similar to those of humans.

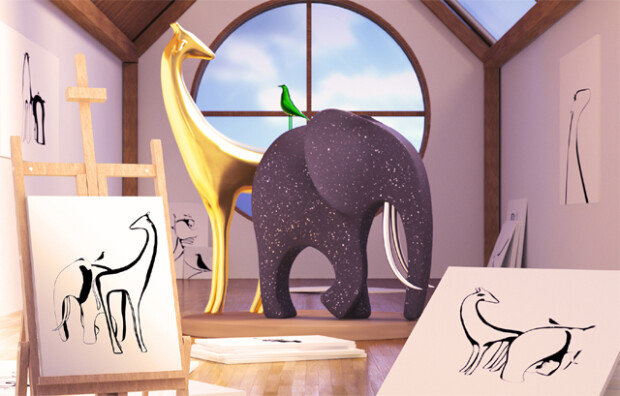

The AI system, dubbed the Generative Query Network (GQN), can render three-dimensional images by predicting the overall three-dimensional structure of a space and the objects in it from two-dimensional scenes seen from a limited number of angles. This lets users view an object from angles that were not visible in the original observations. The AI is likely to pave the way for self-driving vehicles and for robots that can recognize their surroundings and react to them. Ali Eslami, a researcher at Google DeepMind, and his team published the results of their study in the journal Science. DeepMind CEO Demis Hassabis also participated in the study as a co-author. Eslami said that the AI was designed to recognize three-dimensional spaces in the same way that humans do.

With previous AI vision systems, researchers had to enter various information about different scenes together with images of the same object seen from various angles. The older systems also required a huge amount of training data labeled by humans, including the direction of a scene, the spatial location of an object and the range of pixels belonging to particular objects. Creating the training data took too much time, and the systems could not properly recognize objects in complex spaces or objects with curved surfaces.

The GQN, however, does not depend on training data labeled by humans. Rather, it can grasp the three-dimensional structure of an object as long as it can observe the object from various angles. The system can also generate images of views that it never observed. After observing every corner of a maze, the AI can create a three-dimensional map of it and show the space as a video. “The system is a result of going beyond the fundamental limits of machine learning that required humans to educate the system with every little detail,” said Lee Kyoung-mu, a professor of electrical and computer engineering at Seoul National University. “We can say that it has come closest to humans’ ability for perception.”

Since drawing wide attention in October last year with AlphaGo Zero, which taught itself Go through self-play and defeated the earlier AlphaGo version that had beaten a human champion by 100 games to none, DeepMind has been expanding the areas of its research. Last month, it published a paper in the journal Nature after working with researchers from University College London to develop an AI system capable of mimicking mammals’ pathfinding abilities.

kyungeun@donga.com
